Real-time Stable Markerless Tracking for Augmented Reality Using Image Analysis/Synthesis Technique

Abstract

Augmented Reality (AR) has developed over the past decade, with applications in entertainment, training, education and other areas. Vision-based marker tracking has been a common technique in AR applications because of its low cost and the availability of free libraries such as ARToolKit. Recent research aims to enable markerless tracking, removing the unnatural black-and-white fiducial markers that must be attached to the subject being tracked. Common markerless tracking techniques extract natural image features from the video stream. However, because of perspective foreshortening, visual clutter, occlusion, sparseness and lack of texture [1], feature-detection approaches are inconsistent and unreliable across different scenarios.

This project proposes a markerless tracking technique based on an image analysis/synthesis approach. The tracker seeks the pose that minimizes the relative difference in image illumination between the synthesized and captured images. By using a 3D geometric model and correct illumination synthesis, the approach promises more robust and stable results across different scenarios. Its biggest concerns, however, are speed and efficient initialization. The aim of our project is to investigate and produce robust real-time markerless tracking. Speed can be improved by reducing the number of iterations through different optimization techniques, and graphics processors (GPUs) will be exploited to accelerate rendering and optimization through their parallel processing capabilities. The outcomes of this project include new reusable software modules for AR, VR and HCI applications.
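One way to write this objective is shown below. It is a sketch under assumptions, not the project's exact cost: $\mathbf{p}$ is the pose parameter vector, $I_s(\mathbf{x};\mathbf{p})$ is the image synthesized from the 3D model under the estimated illumination, $I_c(\mathbf{x})$ is the captured frame, and $\Omega(\mathbf{p})$ is the set of pixels covered by the projected model. Dividing by the captured intensity, with a small constant $\epsilon$ to avoid division by zero, is our reading of "relative difference".

$$
\hat{\mathbf{p}} = \arg\min_{\mathbf{p}} \sum_{\mathbf{x} \in \Omega(\mathbf{p})} \left( \frac{I_s(\mathbf{x};\mathbf{p}) - I_c(\mathbf{x})}{I_c(\mathbf{x}) + \epsilon} \right)^{2}
$$

Each iteration of the optimizer must re-render $I_s$ for the current $\mathbf{p}$, which is why fewer iterations and GPU rendering both translate directly into speed.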

Approach

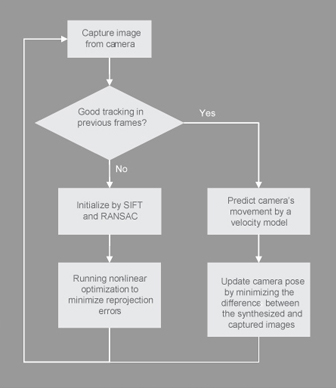

Figure 1 illustrates the workflow of our markerless tracking algorithm. The tracking process is initialized with SIFT feature matching and a robust RANSAC-based method. Once the camera pose has been initialized, the pose in each new frame can be updated quickly from the pose in the previous frame, because the camera does not move much between two consecutive frames. A velocity model will first be used to predict the new pose, and this predicted pose will be used to synthesize a projected image of the object. The pose will then be updated iteratively by minimizing the difference between the synthesized and captured images. If this optimization fails, the algorithm reinitializes by matching SIFT features again.
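The per-frame control flow described above can be sketched as follows. This is a minimal illustration, not the project's implementation: `Pose`, `refine` and `reinitialize` are hypothetical names, with `refine` standing in for the analysis/synthesis optimizer (returning `None` when it fails) and `reinitialize` for SIFT matching plus RANSAC. The velocity model is assumed to be constant velocity, applied component-wise to a 6-vector pose.

```python
from typing import Callable, List, Optional, Tuple

Pose = Tuple[float, ...]  # hypothetical 6-DoF pose vector, e.g. (rx, ry, rz, tx, ty, tz)


def predict_constant_velocity(prev: Pose, prev_prev: Pose) -> Pose:
    """Assume the pose changes by the same amount as over the last frame.
    Adding rotation-vector components is only a small-motion approximation."""
    return tuple(p + (p - q) for p, q in zip(prev, prev_prev))


def track_frame(frame: object,
                history: List[Pose],
                refine: Callable[[object, Pose], Optional[Pose]],
                reinitialize: Callable[[object], Optional[Pose]]) -> Optional[Pose]:
    """One pass of the tracking loop. `refine` and `reinitialize` are hypothetical
    stand-ins for the analysis/synthesis optimizer and the SIFT + RANSAC initializer."""
    pose = None
    if history:
        # Use the velocity model when two poses are known, else reuse the last pose.
        guess = (predict_constant_velocity(history[-1], history[-2])
                 if len(history) >= 2 else history[-1])
        pose = refine(frame, guess)  # None means the optimization failed
    if pose is None:
        history.clear()  # velocity from before a reinitialization is meaningless
        pose = reinitialize(frame)
    if pose is not None:
        history.append(pose)
        del history[:-2]  # only the last two poses are needed for prediction
    return pose
```

Keeping the fallback inside the same function means a lost track costs one expensive SIFT pass and then returns to the cheap predict-and-refine path on the next frame.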

Fig 1. The design of our real-time markerless tracking algorithm.

Automatic Initialization

Real-time tracking requires object recognition to find a given object in the sequence of video frames. Any object in an image has many interesting points, or "features", that can be extracted to provide a feature description of the object. A description extracted from a training image can then be used to identify the object when locating it in a video frame that contains many other objects. For recognition to be reliable, the set of features extracted from the training image must be robust to changes in image scale, noise, illumination and local geometric distortion.

We investigated David Lowe's Scale-Invariant Feature Transform (SIFT) [2], a computer-vision algorithm that detects and describes local features in images. SIFT finds distinctive keypoints that are invariant to location, scale and rotation, and robust to affine transformations (changes in scale, rotation, shear and position) and to changes in illumination, which makes it well suited to object recognition. However, detecting and matching keypoints between the object image and each video frame in real time requires a massive amount of CPU processing, which greatly reduces the frame rate.
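Lowe [2] matches SIFT descriptors by nearest-neighbour search and rejects a match when the nearest neighbour is not clearly closer than the second nearest, using a distance-ratio threshold of 0.8. The sketch below shows that ratio test in plain Python; it is not SiftGPU's matcher, and it assumes descriptors are equal-length float vectors (128-D for SIFT). It is exhaustive, whereas Lowe uses an approximate search for speed.

```python
import math
from typing import List, Sequence, Tuple


def ratio_test_matches(query: Sequence[Sequence[float]],
                       train: Sequence[Sequence[float]],
                       ratio: float = 0.8) -> List[Tuple[int, int]]:
    """Return (query_index, train_index) pairs that pass Lowe's ratio test."""
    if len(train) < 2:
        return []  # the test needs a second-nearest neighbour
    matches = []
    for qi, q in enumerate(query):
        # Euclidean distance from this descriptor to every train descriptor, nearest first.
        dists = sorted((math.dist(q, t), ti) for ti, t in enumerate(train))
        (d1, best), (d2, _) = dists[0], dists[1]
        if d1 < ratio * d2:  # keep only unambiguous matches
            matches.append((qi, best))
    return matches
```

The inner loop touches every train descriptor for every query descriptor, so with hundreds of keypoints per frame and 128 dimensions per descriptor the cost grows quickly, which is the CPU bottleneck described above.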

Fortunately, dedicated graphics hardware with increasing computational power and programmability allows us to integrate SiftGPU [3], a GPU-based feature detector, into our application. SiftGPU is a GPU implementation of SIFT that runs at very high speed by porting the existing CPU algorithms to the GPU. As a result, we achieve higher frame rates for real-time detection and matching.

Using SiftGPU, we extract keypoints from the input image of the object to be tracked and match them against each frame of the video sequence. Once there are enough successful matches, we apply a robust RANSAC-based method to compute the homography matrix between the video frame and the image of the object. From this homography we estimate the 3D pose and draw a virtual 3D object on top of the real object.
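The robust homography step can be sketched as below. This is a minimal pure-Python illustration of RANSAC, not the authors' code: each iteration fits an exact homography to four random correspondences (fixing h33 = 1, which assumes that entry is non-zero), counts the matches whose reprojection error is under a pixel threshold, and keeps the model with the most inliers. The function names, the 500 iterations and the 3-pixel threshold are illustrative assumptions. A production version would also normalize coordinates and re-fit H to all inliers; OpenCV's `cv2.findHomography` with the `cv2.RANSAC` flag is a ready-made alternative.

```python
import math
import random
from typing import List, Optional, Sequence, Tuple

Point = Tuple[float, float]


def solve_linear(a: List[List[float]], b: List[float]) -> Optional[List[float]]:
    """Solve a x = b by Gaussian elimination with partial pivoting; None if singular."""
    n = len(b)
    m = [row[:] + [rhs] for row, rhs in zip(a, b)]  # augmented copy
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        if abs(m[pivot][col]) < 1e-12:
            return None
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, n):
            f = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= f * m[col][c]
    x = [0.0] * n
    for r in range(n - 1, -1, -1):  # back substitution
        x[r] = (m[r][n] - sum(m[r][c] * x[c] for c in range(r + 1, n))) / m[r][r]
    return x


def homography_from_4(src: Sequence[Point], dst: Sequence[Point]) -> Optional[List[float]]:
    """Exact H (row-major, 9 entries, h33 = 1) from four correspondences."""
    a, b = [], []
    for (x, y), (u, v) in zip(src, dst):
        a.append([x, y, 1.0, 0.0, 0.0, 0.0, -u * x, -u * y]); b.append(u)
        a.append([0.0, 0.0, 0.0, x, y, 1.0, -v * x, -v * y]); b.append(v)
    h = solve_linear(a, b)
    return None if h is None else h + [1.0]


def reprojection_error(h: Sequence[float], p: Point, q: Point) -> float:
    """Distance in pixels between H applied to p and the matched point q."""
    x, y = p
    w = h[6] * x + h[7] * y + h[8]
    if abs(w) < 1e-12:
        return math.inf  # p maps to infinity; treat as an outlier
    u = (h[0] * x + h[1] * y + h[2]) / w
    v = (h[3] * x + h[4] * y + h[5]) / w
    return math.hypot(u - q[0], v - q[1])


def ransac_homography(src: Sequence[Point], dst: Sequence[Point],
                      iterations: int = 500, threshold: float = 3.0, seed: int = 0):
    """Return (H, inlier indices) for the 4-point model with the most inliers."""
    rng = random.Random(seed)
    best_h, best_inliers = None, []
    if len(src) < 4:
        return best_h, best_inliers
    for _ in range(iterations):
        sample = rng.sample(range(len(src)), 4)
        h = homography_from_4([src[i] for i in sample], [dst[i] for i in sample])
        if h is None:
            continue  # degenerate (singular) sample
        inliers = [i for i in range(len(src))
                   if reprojection_error(h, src[i], dst[i]) < threshold]
        if len(inliers) > len(best_inliers):
            best_h, best_inliers = h, inliers
    return best_h, best_inliers
```

With `src` holding keypoint positions in the object image and `dst` the matched positions in the video frame, the returned H maps the object image into the frame.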

Some illustrative results

We demonstrated real-time tracking and pose estimation with our application using a rectangular object; the steps are shown in Figure 2.

Fig 2. The steps of tracking a rectangular object. (a) Feature points are detected and matched with the keypoints of the input image. (b) The 4 corners of the face are detected using the matched points. (c) The 3D pose is calculated and a virtual rectangle is drawn.
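Steps (b) and (c) can be sketched from a homography H (row-major, 9 entries) such as the one produced by the robust estimation. The corners of the reference image are mapped into the frame through H, and the pose is recovered with the standard planar decomposition `H ~ K[r1 r2 t]`; using this decomposition is our assumption, not a description of the authors' code. The sketch also assumes a pinhole camera with focal length `f` in pixels, principal point (`cx`, `cy`), square pixels and zero skew, and that reference-image coordinates are already in the object's units on the plane Z = 0. With noisy data r1 and r2 are not exactly orthonormal, so a fuller version would re-orthonormalize the rotation, for example via an SVD.

```python
import math
from typing import List, Sequence, Tuple

Vec3 = Tuple[float, float, float]


def project_corners(h: Sequence[float], width: float, height: float) -> List[Tuple[float, float]]:
    """Step (b): map the reference image's four corners into the frame through H."""
    corners = [(0.0, 0.0), (width, 0.0), (width, height), (0.0, height)]
    projected = []
    for x, y in corners:
        w = h[6] * x + h[7] * y + h[8]  # homogeneous scale
        projected.append(((h[0] * x + h[1] * y + h[2]) / w,
                          (h[3] * x + h[4] * y + h[5]) / w))
    return projected


def cross(a: Vec3, b: Vec3) -> Vec3:
    return (a[1] * b[2] - a[2] * b[1],
            a[2] * b[0] - a[0] * b[2],
            a[0] * b[1] - a[1] * b[0])


def pose_from_homography(h: Sequence[float], f: float, cx: float, cy: float):
    """Step (c): rotation columns (r1, r2, r3) and translation t of a planar target,
    assuming K = [[f, 0, cx], [0, f, cy], [0, 0, 1]]."""
    def k_inv(v: Vec3) -> Vec3:
        x, y, z = v
        return ((x - cx * z) / f, (y - cy * z) / f, z)

    m1 = k_inv((h[0], h[3], h[6]))  # first column of H
    m2 = k_inv((h[1], h[4], h[7]))  # second column
    m3 = k_inv((h[2], h[5], h[8]))  # third column
    lam = 1.0 / math.sqrt(sum(c * c for c in m1))  # r1 must have unit length
    if m3[2] < 0:
        lam = -lam  # H is defined only up to sign; keep the target in front (t_z > 0)
    r1 = tuple(lam * c for c in m1)
    r2 = tuple(lam * c for c in m2)
    t = tuple(lam * c for c in m3)
    return (r1, r2, cross(r1, r2)), t
```

The projected corners give the outline drawn in step (b), and the rotation and translation give the model-view transform needed to render the virtual rectangle in step (c).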

Our real-time tracking application is not only robust to changes in illumination, translation, scale and rotation; it also performs well under occlusion, as shown in Fig 3.

References

1. Debevec, P. E., Taylor, C. J., and Malik, J. 1996. Modeling and rendering architecture from photographs: a hybrid geometry- and image-based approach. In Proceedings of the 23rd Annual Conference on Computer Graphics and Interactive Techniques (SIGGRAPH '96). ACM Press, New York, NY, 11-20.

2. Lowe, D. G. 2004. Distinctive image features from scale-invariant keypoints. International Journal of Computer Vision 60, 2, 91-110.

3. Wu, C. SiftGPU: A GPU Implementation of Scale Invariant Feature Transform (SIFT). http://www.cs.unc.edu/~ccwu/siftgpu/.