HAPTIC

Abstract

In an interactive augmented reality (AR) system, users are usually immersed in an environment rich in visual and audio feedback. This immersion is typically achieved with a head-mounted display (HMD) and speakers, through which users see and hear 3D virtual human avatars rendered in a real environment. Very few prior works have included any interaction with the virtual avatars beyond the 3D visual changes seen through the HMD whenever users change their viewpoint. The “sense of touch” is missing from most AR environments, and only a handful of research works have looked into haptic interaction between human users and virtual avatars.

This work builds an interactive system that provides high-fidelity haptic feedback to the user. Our goal is for the user to have a rich multimodal sensory experience in an interactive AR environment, within which the user can interact intuitively with virtual avatars. Haptic interaction is a compelling feature for AR: imagine a popular online virtual community in which users can not only talk to, but also shake hands with, virtual avatars. As one psychologist has put it, no other sense is more able to convince us of the reality of an object than our sense of touch.

In this setup, the user wears an HMD with two cameras mounted on it, together with our custom-made haptic glove. The user can walk freely around the designated area, which contains a bar table and a chair. Through the HMD, he sees a life-size, realistic virtual human avatar sitting in the chair. When the user approaches the avatar and pats him on the shoulder, the avatar turns his head towards the user and greets him. In another scene, the avatar initiates the interaction by holding out his hand. The user then extends his own hand and interactively controls the avatar’s virtual hand. Through haptic feedback, the user can feel the pressure of pushing against the avatar’s hand.

Figure 1

Two separate computer vision tracking techniques are used for two different purposes. First, the physical environment is tracked with Parallel Tracking and Mapping (PTAM) to establish the pose of the user’s cameras with respect to the environment. This gives the 3D virtual avatar a fixed frame of reference in world coordinates, and robust tracking allows the avatar to be rendered stably. Second, the user’s glove is tracked with the two HMD cameras using stereo matching. The glove is first segmented by color; then every pixel of the glove silhouette in one camera image is matched with its counterpart in the other. The depth of the glove can then be recovered from the disparity between matched pixels.
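The two tracking tasks can be illustrated with a minimal pure-Python sketch. It assumes, none of which is specified in the original, that the environment tracker reports the camera pose as a rotation `R` and translation `t` mapping world points into camera coordinates, that the stereo pair is rectified so matching pixels lie on the same image row, and that the glove can be segmented with a simple per-channel RGB threshold. Matching here uses a sum-of-absolute-differences (SAD) search along the row, which is one common stereo matching cost; the original does not say which cost it uses. All function names are hypothetical.

```python
# Minimal sketch of the two tracking tasks. All names are hypothetical.
# Assumptions: the tracker gives R, t with p_cam = R p_world + t; the stereo
# pair is rectified (matches share a row); the glove color is known in RGB.

def world_to_camera(p_world, R, t):
    """Express a world-anchored point (e.g. the avatar's seat) in the camera frame."""
    return [sum(R[i][j] * p_world[j] for j in range(3)) + t[i] for i in range(3)]


def segment_glove(image, lo, hi):
    """Binary mask: True where every RGB channel of a pixel lies in [lo, hi]."""
    return [[all(lo[c] <= px[c] <= hi[c] for c in range(3)) for px in row]
            for row in image]


def match_disparity(left_row, right_row, x, max_disp, half=1):
    """Disparity of left_row[x], found by minimizing the SAD over a 1D window
    along the same row of the right image (rectified stereo assumed)."""
    best_d, best_cost = None, float("inf")
    for d in range(max_disp + 1):
        xr = x - d  # candidate column in the right image
        # Skip candidates whose window would fall outside either row.
        if xr - half < 0 or x + half >= len(left_row):
            continue
        cost = sum(abs(left_row[x + k] - right_row[xr + k])
                   for k in range(-half, half + 1))
        if cost < best_cost:
            best_d, best_cost = d, cost
    return best_d


def depth_from_disparity(disparity, focal_px, baseline_m):
    """Depth Z = f * B / d for a rectified stereo rig (f in pixels, B in metres)."""
    if disparity is None or disparity <= 0:
        raise ValueError("need a positive disparity to triangulate depth")
    return focal_px * baseline_m / disparity


if __name__ == "__main__":
    left = [0, 0, 0, 0, 9, 5, 7, 0, 0, 0]
    right = [0, 0, 9, 5, 7, 0, 0, 0, 0, 0]  # same pattern, shifted 2 px left
    d = match_disparity(left, right, x=5, max_disp=5)
    print(d, depth_from_disparity(d, focal_px=700.0, baseline_m=0.06))
```

In a real system the matching would run only over pixels inside the glove mask, and the per-pixel depths would together give the glove's 3D shape.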

The haptic glove consists mainly of an inflatable air bladder inserted between its linings (Figure 1). The air bladder is connected to a pneumatic system. When triggered, the pneumatic system pumps air into the bladder, creating a haptic sensation on the user’s hand.

It is crucial to pump air into the bladder and subsequently release it at precise moments: when the user’s hand (glove) is in contact with the 3D model, air should be pumped in, and when there is no contact, air should be released. A collision detection technique is used to determine whether the user’s hand is in contact with the 3D model.
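The pump/release rule can be sketched as follows. This assumes, since the original does not name its collision technique, that the glove is approximated by a sphere and the touched part of the avatar by an axis-aligned bounding box (AABB). The controller is edge-triggered: it issues a pump or release command only when the contact state changes, so the pneumatic system is not re-commanded every frame. Names such as `BladderController` are hypothetical.

```python
from dataclasses import dataclass
from typing import Optional, Tuple

Vec3 = Tuple[float, float, float]


@dataclass
class AABB:
    """Axis-aligned box standing in for part of the avatar (an assumption)."""
    lo: Vec3
    hi: Vec3


def sphere_intersects_aabb(center: Vec3, radius: float, box: AABB) -> bool:
    """Sphere-vs-box test: clamp the centre onto the box and compare distances."""
    d2 = 0.0
    for c, lo, hi in zip(center, box.lo, box.hi):
        closest = min(max(c, lo), hi)  # nearest point on the box along this axis
        d2 += (c - closest) ** 2
    return d2 <= radius * radius


class BladderController:
    """Edge-triggered control: pump on contact onset, release when contact ends."""

    def __init__(self) -> None:
        self.inflated = False

    def update(self, in_contact: bool) -> Optional[str]:
        if in_contact and not self.inflated:
            self.inflated = True
            return "pump"
        if not in_contact and self.inflated:
            self.inflated = False
            return "release"
        return None  # no change in contact state, nothing to send


if __name__ == "__main__":
    avatar_hand = AABB((0.0, 0.0, 0.0), (0.1, 0.1, 0.1))
    ctrl = BladderController()
    for glove_x in (0.3, 0.12, 0.11, 0.3):  # glove moving in and out along x
        touching = sphere_intersects_aabb((glove_x, 0.05, 0.05), 0.04, avatar_hand)
        print(glove_x, touching, ctrl.update(touching))
```

A per-frame loop like the one above would feed the tracked glove position into the collision test and forward only the non-`None` commands to the pneumatic system.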

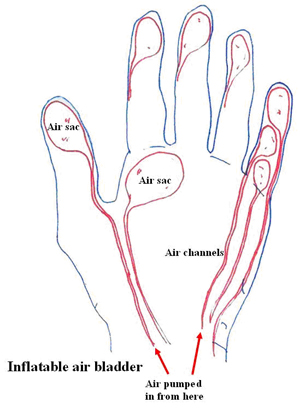

A higher-fidelity glove can be made with an inflatable air bladder consisting of many small air sacs connected to the compressed air source via micro air channels (Figure 2). The advantage is that different parts of the hand can be stimulated independently of one another, which is what happens when we touch something: only the points of contact feel the tactile sensation. The disadvantage, however, is that the glove becomes cumbersome with many air channels, small as they are.
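With many independently fed air sacs, the single contact/no-contact decision becomes a per-sac one. A minimal sketch, assuming each sac's position on the hand is known in the same frame as the contact points returned by collision detection, and that a sac is driven whenever a contact point lies within a chosen radius of it; the sac names, positions, and radius are hypothetical.

```python
import math
from typing import Dict, Iterable, Set, Tuple

Vec3 = Tuple[float, float, float]


def sacs_in_contact(sac_positions: Dict[str, Vec3],
                    contact_points: Iterable[Vec3],
                    radius: float) -> Set[str]:
    """Names of sacs that lie within `radius` of at least one contact point."""
    points = list(contact_points)  # materialize so each sac can scan all points
    return {name for name, pos in sac_positions.items()
            if any(math.dist(pos, p) <= radius for p in points)}


def valve_commands(previous: Set[str], current: Set[str]):
    """Per-sac valve changes: (sacs to inflate, sacs to release), sorted by name."""
    return sorted(current - previous), sorted(previous - current)


if __name__ == "__main__":
    sacs = {"thumb_tip": (0.0, 0.0, 0.0),
            "palm": (0.0, 0.04, 0.0),
            "index_tip": (0.02, 0.08, 0.0)}
    active = sacs_in_contact(sacs, [(0.02, 0.079, 0.0)], radius=0.01)
    print(valve_commands({"palm"}, active))
```

Each sac then follows the same pump-on-contact, release-on-separation rule as the single bladder, but only the sacs near a contact point are driven.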