2010 International Conference on CYBERWORLDS

Paper Abstracts

Key-note talks

Keynote 1

Nadia Magnenat-Thalmann

"A Comprehensive

Methodology to Visualize Articulations for the Physiological

Human"

Musculoskeletal disorders (MSDs) account for the largest fraction of

temporary and permanent disabilities. Osteoarthritis (OA) is one of

the most common MSDs and is characterized by a degeneration of

articular cartilages. Understanding and preventing OA are of

paramount importance in our aging yet very active society, and in

this context, computer-assisted models of articulations are in high

demand in the biomechanical and medical communities. To investigate

the causes of some idiopathic OA, we have devised a unique

comprehensive methodology to simulate musculoskeletal models of

human articulations. Built from a rich variety of acquisition

modalities (MRI, Motion capture, Body scanning, etc.) and innovative

research, these models are fully subject-specific and account for

anatomy, motion and biomechanical behavior of the articulations.

This paper presents a complete overview of the methodology with

medical validation and clinical case studies.

Keynote 2

Tosiyasu L. Kunii

"Modeling Cyberworlds

for Cloud Computing"

The emergence of cloud computing, combined with its high availability through smart devices such as smartphones and media, requires transparent and highly universal modeling of the worlds in cloud computing, which are actually cyberworlds, in order to overcome the combinatorial explosion of computing. Hence, appropriate scalable modeling of cyberworlds meets these requirements effectively. The extreme diversity, versatility and dynamism of cloud computing applications are shown to be supported by cyberworld modeling in an incrementally modular abstraction hierarchy (IMAH) with the homotopy extension property (HEP) and the homotopy lifting property (HLP).

Keynote 3

André Gagalowicz

"3D Tracking of

non Verbal Facial Expressions from Monocular Video Sequences"

We propose a

new technique for 3D face tracking in video sequences, which works

without markers and, once initialized, doesn't require any further

interaction. A specific geometric model of the face (in a neutral

position, for example) is used as input as well as a realistic

hierarchical face animation model.

The algorithm includes a new method for precise face

expression tracking in a video sequence. It uses this hierarchical

animation system built over a morphable polyhedral 3D face model.

Its low level animation mechanism is based upon MPEG-4 specification

which is implemented via local point-driven mesh deformations

adapted to the face geometry. The set of MPEG-4 animation parameters

is in its turn controlled by a higher level system based upon facial

muscle structure. This allows precise tracking of

complicated non verbal facial expressions as well as to produce

face-to-face retargeting by transmitting the expression parameters

to different faces. We finally show how to obtain an automatic

semantic interpretation of the expressions from the former tracking

results.

Keynote 4

Dieter Fellner

"3D

Semantics Pipeline: Creating, Handling and Visualization of

Semantically-Enriched Models"

Building virtual worlds - in particular when we model the worlds

to match existing reality or environments from the past where

remnants are still around - is typically either done by gifted

people modeling with standard CAD tools or with digitization

campaigns based on photogrammetric technologies like Ultracam et al.

Unfortunately, both approaches - so far - only produce large amounts

of textured triangle meshes which, among many other deficiencies,

lack semantic structure in the sense that the visual / geometric

representation of objects or building blocks in these virtual worlds

is not adequately stored as explicit (high-level) semantic entities

but as a sheer mass of low-level planar approximation entities like

3D points or triangles.

The talk will motivate a change in the way we represent

virtual worlds and argue that we do not have to discard all the

tools and workflows we have developed over time but to augment them

with a 'semantic dimension' which will not only improve the quality

of the final result but also make life significantly easier in all

stages of the acquisition pipeline.

Dinner Talk

Yuri Baturin

"Cyberworlds as a Virtual Bridge between Perceptive Space and Outer Space"

Virtual reality appears very useful for the creation of space simulators. It is especially important to teach a cosmonaut to perceive space correctly. A mistake, for example during a spacecraft rendezvous, is fraught with accident. VR-based simulators can provide:

- preflight 3D navigation practice for the cosmonauts;

- manipulative rendezvous and docking training;

- mental visualization of the relative orientation of space modules.

The problem is that the features of human perception of space under weightless conditions have been studied insufficiently.

The brain intuitively solves the task of perception. Can we force the brain to be mistaken? To pose it a challenge?

During my space flights I used imp-art style figures.

- Studies of visual perception based on visual illusions suggest a role of the gravitational reference in the 3D representation of objects and the environment.

- Color sensitivity changes under microgravity conditions.

Setting different parameters of visual perception can promote VR-based simulators for cosmonauts.

Paper Sessions

Human-Computer Interaction

FP: Diego Martínez, Arturo S. García,

José P. Molina Massó, Jonatan Martínez, Pascual González (Spain)

AFreeCA:

Extending the spatial model of interaction

This paper analyses the Spatial Model of

Interaction, a model that governs the possible interactions between two

objects and that has been widely accepted and used in many CVE

systems. As a result of this analysis, some deficiencies are

revealed and a new model of interaction is proposed. Additionally, a

prototype illustrating some of the best features of this model of

interaction is detailed.

FP: Xiyuan Hou, Olga Sourina (Singapore)

Haptic Rendering Algorithm for Biomolecular Docking with Torque

Force

Haptic

devices enable the user to manipulate the molecules and feel

interactions during the docking process in a virtual environment on

the computer. Implementation of torque feedback allows the user to

have a more realistic experience during force simulation and find the

optimum docking positions faster. In this paper, we propose a haptic

rendering algorithm for biomolecular docking with torque force. It

enables the user to experience six degree-of-freedom (DOF) haptic

manipulation in the docking process. A linear smoothing method is

proposed to improve the stability of the haptic rendering during

molecular docking.

FP: Bastian Migge, Andreas Kunz

(Switzerland)

User Model for Predictive Calibration Control on Interactive Screens

On interactive surfaces, a precise calibration of the tracking system is necessary for an exact user interaction. So far, common calibration techniques focus on eliminating geometric distortions. This static calibration is only correct for a specific viewpoint of one single user and parallax error distortions still occur if this changes (i.e. if the user moves in front of the digital screen). In order to overcome this problem, we present an empirical model of the user’s position and movement in front of a digital screen. With this, a model predictive controller is able to correct the parallax error for future positions of the user. We deduce the model’s parameters from a user study on a large interactive whiteboard, in which we measured the 3D position of the user’s viewpoint during common interaction tasks.

FP: Lei Wei, Alexei Sourin (Singapore)

Haptic Rendering of Mixed Haptic Effects

Commonly, surface and solid haptic effects are separated for haptic rendering. We propose a method for defining surface and solid haptic effects as well as various force fields in 3D cyberworlds containing mixed geometric models, including polygon meshes, point clouds, image-based billboards and layered textures, voxel models and function-based models of surfaces and solids. We also propose a way to identify the location of the haptic tool in such haptic scenes and to determine haptic effects consistently and seamlessly when the haptic tool moves through scenes with objects of different sizes, locations, and mutual penetrations.

FP: Zhiqiang Luo, Chih-Chung Lin, I-Ming Chen, Song Huat Yeo, Tsai-Yen Li (Singapore)

Building Hand Motion-Based Character Animation: The Case of Puppetry

Automatic motion generation for a digital character under real-time user control is a challenging problem for computer graphics research and virtual environment applications such as online games. The present study introduces a methodology to generate a glove puppet animation controlled by a new input device, called the SmartGlove, which captures the hand motion. An animation system is proposed to generate the puppet animation based on procedural animation and motion capture data from the SmartGlove. As the control of the puppet character in the animation takes into account the design of the SmartGlove and the operation of the puppet in reality, the physical hand motion can either activate the designed procedural animation through motion recognition or tune the parameters of the procedural animation to build a new puppet motion, which gives the user direct control over the animation. Potential applications and improvements of the current animation system are also discussed.

FP: Mathieu Hopmann, Daniel Thalmann,

Frédéric Vexo (Switzerland)

Virtual Shelf: Sharing Music Between People and

Devices

Digital media are more and more present in our lives, but we are still waiting for interfaces and devices completely adapted to this content. In this paper, we present the virtual shelf, an application dedicated to interacting with our digital music collection. Our concept is to visualize our music collection in a familiar environment, a classic CD shelf, and to interact with it in a natural way, using the well-known drag-and-drop paradigm: you can drop an album in your friend’s shelf to automatically add it to his/her personal music collection, or you can drop it on a nearby sound player in order to play the selected album on this device.

FP: Youngjun Kim, Sang Ok Koo, Deukhee Lee,

Laehyun Kim, Sehyung Park (Korea)

Mesh-to-Mesh Collision Detection by Ray Tracing for Medical

Simulation with Deformable Bodies

We propose a robust mesh-to-mesh collision detection algorithm using a ray tracing method. The algorithm checks all vertices of a geometrical object based on the proposed criteria, and then the colliding vertices are detected. In order to realize a real-time calculation, acceleration by spatial subdivision is performed. Since the proposed ray-traced collision detection method can directly calculate the reacting forces between the colliding objects, this method is apt for a real-time medical simulation dealing with deformable organs. Our method addresses the limitation of the previous ray-traced approach as it can detect collisions between all arbitrarily shaped objects, including non-convex or sharp objects. Moreover, deeply penetrated collisions can be detected effectively.

SP:

Dennis Reidsma, Wolfgang

Tschacher, Fabian Ramseyer, Anton Nijholt (Netherlands)

Measuring Multimodal Synchrony for Human-Computer Interaction

Nonverbal synchrony is an important and natural element in human-human interaction. It can also play various roles in human-computer interaction. In particular this is the case in the interaction between humans and the virtual humans that inhabit our cyberworlds. Virtual humans need to adapt their behavior to the behavior of their human interaction partners in order to maintain a natural and continuous interaction synchronization. This paper surveys approaches to modeling synchronization and applications where this modeling is important. Apart from presenting this framework, we also present a quantitative method for measuring the level of nonverbal synchrony in an interaction and observations on future research that allows embedding such methods in models of interaction behavior of virtual humans.

SP:

Senaka Amarakeerthi, Rasika

Ranaweera, Michael Cohen (Japan)

Speech-based Emotion Characterization using Postures and Gestures

in CVEs

Collaborative Virtual Environments (CVEs) have become increasingly popular in the past two decades. Most CVEs use avatar systems to represent each user logged into a CVE session. Some avatar systems are capable of expressing emotions with postures, gestures, and facial expressions. In previous studies, various approaches have been explored to convey emotional states to the computer, including voice and facial movements. We propose a technique to detect emotions in the voice of a speaker and animate avatars to reflect the extracted emotions in real time. The system has been developed in “Project Wonderland,” a Java-based open-source framework for creating collaborative 3D virtual worlds. In our prototype, six primitive emotional states (anger, dislike, fear, happiness, sadness, and surprise) were considered. An emotion classification system that uses short-time log frequency power coefficients (LFPC) to represent features and hidden Markov models (HMMs) as the classifier was modified to build an emotion classification unit. Extracted emotions were used to activate existing avatar postures and gestures in Wonderland.

SP:

Di Liu, Andy W. H. Khong

(Singapore)

Improvement of Speech Source Localization in Noisy Environment Using Overcomplete Rational-Dilation Wavelet Transforms

The generalized cross-correlation using the phase transform prefilter remains popular for the estimation of time differences of arrival. However, it is not robust to noise and, as a consequence, the performance of direction-of-arrival algorithms is often degraded under low signal-to-noise conditions. We propose to address this problem through the use of a wavelet-based speech enhancement technique, since the wavelet transform can achieve good denoising performance. The overcomplete rational-dilation wavelet transform is then exploited to process speech signals effectively, owing to its higher frequency resolution. In addition, we exploit the joint distribution of the speech in the wavelet domain and develop a novel local noise variance estimator based on the bivariate shrinkage function. As will be shown, our proposed algorithm achieves good direction-of-arrival performance in the presence of noise.
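
As a rough illustration of the baseline this abstract starts from, the following is a minimal sketch of generalized cross-correlation with the phase transform (GCC-PHAT) for estimating a time difference of arrival in samples. It is not the authors' method: the wavelet-based enhancement stage is omitted, the function names `dft` and `gcc_phat_delay` are hypothetical, and a naive O(n^2) transform is used only to keep the example dependency-free.

```python
import cmath


def dft(x, inverse=False):
    """Naive O(n^2) discrete Fourier transform (illustrative, not fast)."""
    n = len(x)
    sign = 1 if inverse else -1
    out = []
    for k in range(n):
        acc = 0j
        for t in range(n):
            acc += x[t] * cmath.exp(sign * 2j * cmath.pi * k * t / n)
        out.append(acc / n if inverse else acc)
    return out


def gcc_phat_delay(sig, ref):
    """Estimate the delay (in samples) of `sig` relative to `ref`.

    A positive result means `sig` arrives later than `ref`.
    """
    n = len(sig) + len(ref)  # zero-pad so circular lags behave like linear lags
    S = dft(list(sig) + [0.0] * (n - len(sig)))
    R = dft(list(ref) + [0.0] * (n - len(ref)))
    cross = [s * r.conjugate() for s, r in zip(S, R)]
    # PHAT weighting: discard magnitude, keep only the phase of each bin.
    eps = 1e-12  # assumption: small constant to avoid division by zero
    weighted = [c / (abs(c) + eps) for c in cross]
    cc = [v.real for v in dft(weighted, inverse=True)]
    peak = max(range(n), key=lambda i: cc[i])
    # Indices in the upper half of the buffer correspond to negative lags.
    return peak if peak <= n // 2 else peak - n
```

Because the PHAT weighting gives every frequency bin equal weight regardless of its energy, bins dominated by noise contribute as much as bins dominated by speech, which is one way to see why the abstract describes the estimator as not robust at low signal-to-noise ratios.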

SP:

Kwang-Eun Ko, Kwee-Bo Sim

(Korea)

Development of a Facial Emotion Recognition Method based on

combining AAM with DBN

In this paper, novel methods for facial emotion recognition in facial image sequences are presented. Our facial emotional feature detection and extraction are based on Active Appearance Models (AAM) with Ekman’s Facial Action Coding System (FACS). Our approach to facial emotion recognition lies in a dynamic and probabilistic framework based on a Dynamic Bayesian Network (DBN) with a Kalman filter for modeling and understanding the temporal phases of facial expressions in image sequences. By combining AAM and DBN, the proposed method can achieve a higher recognition performance level compared with other facial expression recognition methods. The results on the BioID dataset show a recognition accuracy of more than 90% for facial emotion reasoning using the proposed method.

SP:

Shahzad Rasool, Alexei

Sourin (Singapore)

Towards Tangible Images and Video in Cyberworlds – Function-based

Approach

Haptic interaction is commonly used with 3D objects defined by their geometric and solid models. Extension of the haptic interaction to 3D Cyberworlds is a challenging task due to the Internet bandwidth constraints and often prohibitive sizes of the models. We study how to replace visual and haptic rendering of shared 3D objects with 2D image visualization and 3D haptic rendering of the forces reconstructed from the images or augmenting them, which will eventually simulate realistic haptic interaction with 3D objects. This approach allows us to redistribute the computing power so that it can concentrate mainly on the tasks of haptic interaction and rendering. We propose how to implement such interaction with small function descriptions of the haptic information augmenting images and video. We illustrate the proposed ideas with the function-based extension of VRML and X3D.

SP:

Amir Sulaiman, Kirill

Poletkin, Andy W H Khong (Singapore)

Source Localization in the Presence of Dispersion for Next

Generation Touch Interface

We propose a new paradigm of touch interface that allows one to convert daily objects to a touch pad through the use of surface mounted sensors. To achieve a successful touch interface, localization of the finger tap is important. We present an inter-disciplinary approach to improve source localization on solids by means of a mathematical model. It utilizes mechanical vibration theories to simulate the output signals derived from sensors mounted on a physical surface. Utilizing this model, we provide an insight into how phase is distorted in vibrational waves within an aluminium plate which in turn serves as a motivation for our work. We then propose a source localization algorithm based on the phase information of the received signals. We verify the performance of our algorithm using both simulated and recorded data.

Shape Modeling for Cyberworlds

FP: Ming-Yong Pang, Yun Sheng, Alexei Sourin, Gabriela González Castro, Hassan Ugail (Singapore, UK)

Automatic Reconstruction and Web Visualization of Complex PDE Shapes

Various Partial Differential Equations (PDE) have been used in computer graphics for approximating surfaces of geometric shapes by finding solutions to PDEs subject to suitable boundary conditions. The PDE boundary conditions are defined as 3D curves on the surface of the shapes. We propose a way to automatically derive these curves as boundaries of curved patches on the surface of the original polygon mesh. The analytic solution to the PDE used throughout this work is fully determined by finding a set of coefficients associated with parametric functions according to the particular set of boundary conditions. The PDE coefficients require an order of magnitude less space than the original polygon data and can be interactively rendered with different levels of detail. This allows for an efficient exchange of the PDE shapes in 3D Cyberworlds and their web visualization. In this paper we analyze and formulate the requirements for extracting suitable boundary conditions, describe the algorithm for the automatic derivation of the boundary curves, and present its implementation as a part of the function-based extension of VRML and X3D.

FP: Kai Wang, Jianmin Zheng, Hock-Soon Seah

(Singapore)

Reference Plane Assisted Sketching Interface for 3D Freeform Shape Design

This paper presents a sketch-based modeling system with auxiliary planes as references for 3D freeform shape design. The user first creates a rough 3D model of arbitrary topology by sketching some contours of the model. Then the user can use sketching to perform deformation, extrusion, etc., to edit the model. To regularize and interpret the user’s inputs properly, we introduce some rules for the strokes into the system, which are based on both the semantic meaning of the sketched strokes and human psychology. Unlike other sketching systems, all the creation and editing operations in the presented system are performed with reference to some auxiliary planes that are automatically constructed based on the user’s sketches or default settings. The use of reference planes provides a heuristic solution to the problem of ambiguity of a 2D interface for modeling in 3D space. Examples demonstrate that the presented system allows the user to intuitively and intelligently create and edit 3D models even with complex topology, which is usually difficult in other similar sketch-based modeling systems.

FP: Ming-Yong Pang (China)

Optimizing Triangulation of Implicit Surface Based on Quadric Error

Metrics

In this paper, based on quadric error metrics, we present a hybrid approach to optimize a triangulation created from an implicit surface with sharp features. The approach first uses a resampling process to update the vertex positions of the initial triangulation. A dual mesh of the triangulation is constructed at the same time to restrict the updated positions and to project the new positions onto the implicit surface. Each vertex position of the updated triangulation is then optimized by minimizing the squared distances from the vertex to the tangent planes of the implicit surface at the corresponding vertices of the modified dual mesh. The optimization process is combined with a curvature-dependent adaptive mesh subdivision. Our method uses an error metric to measure the deviation between the vertices in the final triangulation and the implicit surface.
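
The least-squares step mentioned in this abstract (placing a vertex so that the sum of squared distances to a set of tangent planes is minimal) can be sketched as follows. This is only a generic quadric-error minimization under stated assumptions, not the authors' implementation: each plane is given as a unit normal `n` and offset `d` with `n . x = d`, the names `solve3` and `optimal_vertex` are hypothetical, and the planes are assumed to be non-degenerate so that the 3x3 system is invertible.

```python
def solve3(a, b):
    """Solve a 3x3 linear system a x = b by Gauss-Jordan elimination
    with partial pivoting. Assumes the matrix is non-singular."""
    m = [row[:] + [rhs] for row, rhs in zip(a, b)]
    for col in range(3):
        pivot = max(range(col, 3), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(3):
            if r != col:
                f = m[r][col] / m[col][col]
                m[r] = [x - f * y for x, y in zip(m[r], m[col])]
    return [m[i][3] / m[i][i] for i in range(3)]


def optimal_vertex(planes):
    """Return the point x minimizing sum((n . x - d)^2) over all planes.

    `planes` is a list of (n, d) pairs, n being a unit normal (3-tuple).
    Setting the gradient to zero gives (sum n n^T) x = sum d n.
    """
    A = [[0.0] * 3 for _ in range(3)]
    b = [0.0] * 3
    for n, d in planes:
        for i in range(3):
            b[i] += d * n[i]
            for j in range(3):
                A[i][j] += n[i] * n[j]
    return solve3(A, b)


# Usage: four tangent planes that all pass through the point (1, 2, 3).
planes = [
    ((1.0, 0.0, 0.0), 1.0),
    ((0.0, 1.0, 0.0), 2.0),
    ((0.0, 0.0, 1.0), 3.0),
    ((0.6, 0.8, 0.0), 2.2),
]
print(optimal_vertex(planes))
```

When the planes meet at a sharp corner or edge, the minimizer is pulled onto that feature, which is why this kind of metric is commonly used for surfaces with sharp features.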

FP: Simant Prakoonwit (United Kingdom)

3D Reconstruction from Few Silhouettes Using Statistical Models and Landmark Points

3D model reconstruction is an important issue in computer graphics, computer vision and virtual reality. This paper presents a new method for rapid reconstruction of detailed 3D surface models from a small number, e.g. 3-4, of 2D silhouettes of objects taken from different views. A statistical shape model is fitted to a set of landmark points, which are automatically created from the 2D silhouettes, to reconstruct a full detailed surface. The landmark points are optimally distributed to guarantee that the salient features of objects are included in the reconstruction process. Some proof-of-concept computational experiments have been conducted to reconstruct a test object. The results show that the proposed method is capable of reconstructing an acceptable detailed 3D surface.

Simulation and Training

FP: Stefan Rilling, Ulrich Wechselberger,

Stefan Müller (Germany)

Bridging the Gap Between Didactical Requirements and Technological

Challenges in Serious Game Design

Game-based training has attracted lively interest within industry. We present an interdisciplinary work on the application of computer game principles and techniques within an automation industry training scenario. A data-flow-based component architecture forms the technical foundation of our system and enables us to exploit the capabilities of available middleware components such as game or physics engines. An interactive simulation of a real automation plant is built by combining state-based and physical object behavior. A model of training scenarios founded on the didactical principles of game-based training is built upon our implemented system.

FP: Md. Tanvirul Islam, Kaiser Md.

Nahiduzzaman, Why Yong Peng, Golam Ashraf (Singapore)

Learning Character Design from Experts and Laymen

The use of pose and proportion to represent character traits is well established in art and psychology literature. However, there are no Golden Rules that quantify a generic design template for stylized character figure drawing. Given the wide variety of drawing styles and a large feature dimension space, it is a significant challenge to extract this information automatically from existing cartoon art. This paper outlines a game-inspired methodology for systematically collecting layman perception feedback, given a set of carefully chosen trait labels and character silhouette images. The rated labels were clustered and then mapped to the pose and proportion parameters of characters in the dataset. The trained model can be used to classify new drawings, providing valuable insight to artists who want to experiment with different poses and proportions in the draft stage. The proposed methodology was implemented as follows: 1) Over 200 full-body, front-facing character images were manually annotated to calculate pose and proportion; 2) A simplified silhouette was generated from the annotations to avoid copyright infringements and prevent users from identifying the source of our experimental figures; 3) An online casual role-playing puzzle game was developed to let players choose meaningful tags (role, physicality and personality) for characters, where tags and silhouettes received equitable exposure; 4) Analysis of the generated data was done both in stereotype label space and in character shape space; 5) Label filtering and clustering enabled dimension reduction of the large description space, and subsequently, a select set of design features was mapped to these clusters to train a neural network classifier. The mapping between the collected perception and shape data gives us quantitative and qualitative insight into character design. It opens up applications for creative reuse of (and deviation from) existing character designs.

FP: Shang-Ping Ting, Suiping Zhou, Hu Nan (Singapore)

A Computational Model of Situation Awareness for MOUT Simulations

Situation awareness is the perception of environmental elements within a volume of time and space, the comprehension of their meaning, and the projection of their status in the near future. The quality of situation awareness directly affects the decision-making process for human soldiers in Military Operations on Urban Terrain (MOUT). It is therefore important to accurately model situation awareness in order to generate realistic tactical behaviors for the non-player characters (also known as bots) in MOUT simulations. This is a very challenging problem due to the time constraints of the decision-making process and the heterogeneous cue types involved in MOUT. Although there are some theoretical models of situation awareness, they generally do not provide computational mechanisms suitable for MOUT simulations. In this paper, we propose a computational model of situation awareness for the bots in MOUT simulations. The computational model aims to form situation awareness quickly from some key cues of the tactical situation. It is also designed to work together with some novel features that help to produce realistic tactical behaviors. These features include case-based reasoning, qualitative spatial representation and expectations. The effectiveness of the computational model is assessed with Twilight City, a virtual environment that we have built for MOUT simulations.

SP:

Ungyeon Yang, Gun A. Lee, Yongwan Kim, Dongsik Jo, Jin Sung Choi, Ki-Hong Kim (Korea)

Virtual Reality based Welding Training Simulator with 3D Multimodal

Interaction

In this paper, we present a prototype Virtual Welding Simulator, which supports interactive training of the welding process by using a multimodal interface that can deliver realistic experiences. The goal of this research is to overcome difficult problems by using virtual reality technology in training tasks where welding is treated as a principal manufacturing process. The technical issues of system design and implementation are described, including real-time simulation and visualization of the welding bead, providing a realistic experience through 3D multimodal interaction, presenting visual training guides for novice workers, and a visual and interactive interface for training assessments. According to the results of an initial user study, the prototype VR-based simulator system appears to be helpful for training welding tasks, especially in terms of providing visual training guides and instant training assessment.

Data Mining and Cybersecurity

FP: Richmond Hong Rui Tan, Flora S. Tsai

(Singapore)

Authorship Identification for Online Text

Authorship identification for online text such as blogs and e-books is a challenging problem, as these documents do not have a considerable amount of content. Identification is therefore much harder than for other documents such as books and reports. The paper investigates which features and classifiers are suitable for such texts in terms of accuracy. Syntactic features are found to be good for large data sets, whereas lexical features are good for small data sets. The results can be used to customize and further improve authorship detection techniques according to the characteristics of the writing samples.

FP: Sascha Hauke, Martin Pyka, Markus

Borschbach, Dominik Heider (Germany)

Harnessing Recommendations from Weakly Linked Neighbors in

Reputation-based Trust Formation

Interactions between individuals are inherently dependent upon trust, no matter if they occur in the real world or in cybercommunities. Over the past years, proposals have been made to model trust relations computationally, either to assist users or for modeling purposes in multi-agent systems. These models rely implicitly on the social networks established by participating entities (be they autonomous agents or internet users). However, state-of-the-art trust frameworks often neglect the structure of those complex networks. In this paper, we present a new approach allowing agent-based trust frameworks to leverage information from so-called weak ties that would otherwise be neglected. An effective and robust voting scheme based on an agreement metric is presented and its benefit is shown through simulations.

FP: Thomas Monoth, Babu Anto P (India)

Contrast-Enhanced Visual Cryptography Schemes Based on Additional

Pixel Patterns

Visual cryptography is a kind of secret image sharing scheme that uses the human visual system to perform the decryption computations. A visual cryptography scheme allows confidential messages to be encrypted into k-out-of-n secret sharing schemes. Whenever the number of cooperating participants from the group of n is larger than or equal to the predetermined threshold value k, the confidential message can be obtained by these participants. Contrast is one of the most important parameters in visual cryptography schemes. Usually, the reconstructed secret image will be darker (through contrast degradation) than the original secret image. The proposed scheme achieves better contrast and reduces the noise in the reconstructed secret image without any computational complexity. In this method, additional pixel patterns are used for the white pixels, which improves the contrast of the reconstructed secret image compared with existing visual cryptography schemes.
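
To make the contrast degradation mentioned in this abstract concrete, here is a minimal sketch of the classic 2-out-of-2 visual cryptography construction with two subpixels per secret pixel. It illustrates the conventional baseline only and does not implement the additional pixel patterns proposed in the paper; the function names `make_shares`, `stack` and `blackness` are hypothetical.

```python
import random

# Each secret pixel expands into two subpixels per share (1 = black, 0 = white).
PATTERNS = [(0, 1), (1, 0)]


def make_shares(secret_row, rng):
    """Split a row of secret pixels (0 = white, 1 = black) into two shares."""
    share1, share2 = [], []
    for pixel in secret_row:
        p = rng.choice(PATTERNS)
        # White pixel: both shares get the same pattern.
        # Black pixel: the second share gets the complementary pattern.
        q = p if pixel == 0 else (1 - p[0], 1 - p[1])
        share1.extend(p)
        share2.extend(q)
    return share1, share2


def stack(share1, share2):
    """Stacking two transparencies behaves like a subpixel-wise OR."""
    return [a | b for a, b in zip(share1, share2)]


def blackness(stacked):
    """Fraction of black subpixels in each reconstructed pixel."""
    return [(stacked[i] + stacked[i + 1]) / 2 for i in range(0, len(stacked), 2)]


# Usage: a short row with white, black, black, white secret pixels.
s1, s2 = make_shares([0, 1, 1, 0], random.Random(1))
print(blackness(stack(s1, s2)))
```

In this construction a white secret pixel is reconstructed as half black (0.5) and a black one as fully black (1.0), so the stacked image is darker than the original. Each share on its own always shows one black subpixel per pixel and therefore reveals nothing about the secret.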

FP: Marina Gavrilova, Roman Yampolskiy (Canada)

Applying Biometric Principles to Avatar Recognition

Domestic and industrial robots, intelligent software agents, virtual world avatars and other artificial entities are quickly becoming a part of our everyday life. Just as it is necessary to accurately authenticate the identity of human beings, it is becoming essential to be able to determine the identities of non-biological agents. In this paper, we present the current state of the art in virtual reality security, focusing specifically on emerging methodologies for avatar authentication. We also outline future directions and potential applications for this high-impact research field.

FP: Najmus Saqib Malik, Friedrich Kupzog,

Michael Sonntag (Pakistan)

An Approach to Secure Mobile Agents in Automatic Meter Reading

The mobile agent is a suitable paradigm for collecting information from multiple sites in a distributed environment. Compared to other technologies, mobile agents can be used beneficially for Automatic Meter Reading (AMR) and to measure power quality information at each energy meter. The energy meter contains an embedded system, so the choice of agent platform for such an application is very important. This article investigates different methods cited in the literature that use the mobile agent paradigm for the AMR process. It proposes a method that reduces the total security computation cost incurred in the AMR process. In this method, energy meters are organized into groups based on geographical location. In each location, energy meters perform their jobs under a security manager. The concept of a local mobile agent is proposed to avoid direct visits of external mobile agents to energy meters. The local mobile agent carries the acceptable queries from the security manager and visits the energy meters. Mathematical modeling is used to represent the security computation cost incurred by each method cited in the literature and to compare it with the proposed method. It is concluded that the proposed mechanism reduces the security computation cost considerably compared to other methods.

FP: Haichang Gao, Zhongjie Ren, Xiuling Chang, Xiyang Liu, Uwe Aickelin (China)

A New Graphical Password Scheme Resistant to Shoulder-Surfing

Shoulder-surfing is a known risk where an attacker can capture a password by direct observation or by recording the authentication session. Due to the visual interface, this problem is exacerbated in graphical passwords. Some graphical schemes are resistant or immune to shoulder-surfing, but they have significant usability drawbacks, usually in the time and effort needed to log in. In this paper, we propose and evaluate a new shoulder-surfing resistant scheme which has desirable usability for PDAs. Our inspiration comes from the drawing input method in DAS and the association mnemonics in Story for sequence retrieval. The new scheme requires users to draw a curve across their password images in order rather than click directly on them. The drawing input trick, along with complementary measures such as erasing the drawing trace, displaying degraded images, and starting and ending with randomly designated images, provides good resistance to shoulder-surfing. A preliminary user study showed that users were able to enter their passwords accurately and to remember them over time.

Shared Virtual Worlds and Games

FP: Zin-Yan Chua, Yilin Kang, Xing Jiang, Kah-Hoe Pang, Andrew C. Gregory, Chi-Yun Tan, Wai-Lun Wong, Ah-Hwee Tan, Yew-Soon Ong, Chun-Yan Miao (Singapore)

Youth Olympic Village Co-Space

We have designed and implemented a 3D virtual world, based on the Co-Space concept, that encompasses the Youth Olympic Village (YOV) and several sports competition venues. It is a massively multiplayer online (MMO) virtual world built according to the actual, physical locations of the YOV and sports competition venues. On top of that, the Co-Space is being populated with human-like avatars, which are created according to actual human size and appearance; they perform their activities and interact with the users in a real-world context. In addition, autonomous intelligent agents are integrated into the Co-Space to provide context-aware and personalized services to the users. These agents, which take the form of human-like avatars, act as the users’ personal tour guides; they interact with the users and gradually learn the users’ preferences. The agents are then able to suggest suitable locations for the users to visit. The intention of deploying these agents is to enrich the Co-Space with a variety of interactions tailored to each user’s preferences instead of the same content for everyone. In addition, our Co-Space provides an avenue for people from different locations throughout the world to explore the YOV and experience the atmosphere of the sports events in Singapore.

FP: Inmaculada Remolar, Miguel Chover, Ricardo Quirós, Jesus Gumbau, Francisco Ramos, Pascual Castelló, Cristina Rebollo (Spain)

Virtual Trade Fair: A Multiuser 3D Virtual World for Business

Online virtual trade fairs are becoming more popular in the business world. They enable companies to establish an economic trade relationship with their customers. This article presents a multiuser virtual trade fair developed using the technology of 3D game engines. This makes it possible to obtain a high degree of realism and benefit from every advantage of using this software: realistic lighting, cast shadows, collision detection, animation, etc. Moreover, the virtual trade fair is accessible online, requiring only that a plug-in be installed on the visitor’s computer to enter the virtual world. In order to facilitate management of the business fair, our application builds the 3D objects automatically. Participants in the trade fair can customize their virtual stand and the application will generate the necessary code for it to be included in the rendered virtual world. In addition, some interactive objects can be added to the virtual stand to provide fair visitors with essential information such as business contact details, publicity catalogues and so on. Finally, users, who are represented by avatars, can interact with each other while they are visiting the virtual fair.

FP: Lilia Gómez Flores, Martyn Horner (United Kingdom)

Leisure Time in Second Life: Cultural Differences and Similarities

This paper analyses leisure activities in Cyberworlds such as Virtual Communities (VCs) on the Internet, and how people, through their avatars, explore these new worlds in search of fun. It presents a cross-cultural study of three different cultures represented within a VC on the Internet. The three chosen cultures are Latin American, American and British, and the VC is Second Life (SL). The main objective is to assess if and how the cultural background of the people behind avatars influences leisure time spent in these communities. The paper also proposes a methodology using 3D avatars as researchers, and draws some conclusions about the subject.

FP: Kenji Ohmori, Tosiyasu L. Kunii (Japan)

A Formal Methodology for Developing Enterprise Systems Procedurally: Homotopy, Pi-Calculus and Event-Driven Programs

A new approach for designing and modeling enterprise systems is described. The homotopy lifting property (HLP) is used to design an enterprise system in a bottom-up way. As an example, task changes in a department are designed and implemented by our approach: the incrementally modular abstraction hierarchy (IMAH), starting at the most abstract level of homotopy and ending at the most concrete level of program code. At first, the HLP is constructed as the most abstract level. Then, task changes and a state transition diagram, which constitute the two spaces of the HLP, are defined from an abstract level to a concrete level. Then, agent diagrams are obtained in a bottom-up way. The agent diagrams are transformed from an abstract level to a concrete level until program code written in a C-like programming language is implemented on XMOS, an event-driven, multi-threaded processor. While carrying out these procedures, invariants are preserved to avoid unnecessary testing, which usually consumes a large amount of time and cost in traditional approaches. The established method is also effective in modeling and designing cyberworlds.

FP: Guanghao Low, Dion Hoe-Lian Goh, Chei Sian Lee (Singapore)

Looking for a Good Time: Information Seeking in Mobile Content Sharing and Gaming Environments

Indagator is a mobile application which incorporates multiplayer, pervasive gaming elements into content sharing. The application allows users to annotate physical locations with multimedia content (annotations), and concurrently, provide opportunities for play through creating and engaging interactive game elements, earning points, and socializing. This paper examines the motivations for accessing and viewing annotations in Indagator, and highlights the influence of introducing games to mobile content sharing applications in general. Participants used Indagator for one week and were thereafter interviewed. Results were drawn by triangulating data from the interview, server logs, and the content of the annotations accessed. Three overarching motivation categories for accessing annotations emerged from our analysis – Facts, Friends, and Fun. Further, participants generally felt that games would sustain their interest in accessing annotations in the long run. Implications of our work are also discussed.

FP: Jacob van Kokswijk (Belgium)

Social Control in Online Society. Advantages of Self-regulation on the Internet

Online communities are just like real worlds: control is necessary to make for a pleasant society. This does not automatically imply government or company control. In many virtual communities there has been a kind of social control for many years now that adequately maintains order in their public virtual space. Does this mean that laws are unnecessary? Some people cry out for an Internet police that must maintain public order in the cyber-Gomorrah. However, there can be order without law. Not only is legislation unnecessary for law, but law is unnecessary for order. A field study showed that as most people find the maxim 'everyone is deemed to know the law' too hard, and as the costs of procedures are so high, it is easier to fall back on commonsense norms. In this case all three functions of law -rule formation, enforcement, and dispute resolution- are asserted by means of these informal norms. And if the costs of learning and using the law are so high, then there is little use for the government to adjust the law, since citizens will ignore it anyway. Hence, these high costs become an argument for negotiating rather than the complex governmental solutions to property rights conflicts. Why then should we make (new) rules for the Internet society, if there are (too many?) rules already? Do social conventions, control and arbitration not suffice? These and similar questions arise when we look at 'life' in the online communities. Self-regulation of online communities alone is sometimes insufficient. The government will intervene in cases of serious abuse or criminal cases. A good balance between external and internal regulation can be found by having all parties involved in the chain jointly formulate regulations, in which supervision, maintaining order, dispute resolution, and misconduct are openly organized. 
Draconian measures, often supported by politicians out of ignorance, will however have little effect, as common sense looks for solutions in the future and legislation is based on the past. This article describes the advantages of self-regulation on the Internet and the (im)possibilities social control offers.

FP: Andrey Leonov, Alexander Serebrov, Mikhail Anikushkin, Dmitriy Belosokhov, Alexander Bobkov, Evgeny Eremchenko, Pavel Frolov, Ilya Kazanskiy, Andrey Klimenko, Stanislav Klimenko, Viktoria Leonova, Andrey Rashidov, Vasil Urazmetov, Valeriy Droznin, Viktor Dvigalo, Vladimir Leonov, Sergey Samoylenko, Alexander Aleynikov, Tikhon Shpilenok (Russia)

Virtual Story in Cyberspace: Valley of Geysers, Kamchatka

A virtual 3D model of the Valley of Geysers, Kamchatka, provides virtual travelling and interactive storytelling in cyberspace and serves scientific visualization, modeling of geodynamical processes, disaster preparedness and response, environmental studies, ecological education, and virtual ecotourism.

FP: Yang-Wai Chow, Willy Susilo, Hua-Yu Zhou (Australia)

CAPTCHA Challenges for Massively Multiplayer Online Games. Mini-games CAPTCHAs

Botting, the use of automated programs in Massively Multiplayer Online Games (MMOGs), has long been a problem in these networked virtual environments. The use of bots gives cheating players an unfair advantage over honest players. Using bots, players can potentially amass a huge amount of game wealth, resources, experience points, etc. without much effort, as bot programs can be run continuously for countless hours and will never get tired. Honest players, on the other hand, have to spend much more time and effort in order to gather an equal amount of game resources. This destroys the fun for legitimate players, ruins the balance of the game and threatens the game developer’s revenue base, as discontented players may stop playing the game. Research efforts have proposed the incorporation of CAPTCHA (Completely Automated Public Turing test to tell Computers and Humans Apart) challenges in games to prevent or detect potential cheaters, by presenting challenges that are easy for a human to solve but difficult for a computer to solve. However, the incorporation of CAPTCHA challenges in games is often seen in a negative light, as they are deemed to be intrusive and to destroy the sense of immersion in the game. This research presents an approach to using CAPTCHAs in MMOGs that is both secure and adds gameplay value to the game.

Cyberlearning in Cyberworlds

FP: Jafar Al-Gharaibeh, Clinton Jeffery (United States)

PNQ: Portable Non-Player Characters with Quests

There is a growing interest in using game-like virtual environments for education. Massively multiuser online games such as World of Warcraft employ computer controlled non-player characters (NPCs) and quest activities in training or tutoring capacities. This approach is very effective, incorporating active learning, incremental progress, and creative repetition. This paper explores ways to utilize this model in educational virtual environments, using NPCs as anthropocentric keys to organize and deliver educational content. Our educational NPC design includes a knowledge model and a user performance model, in addition to the physical traits, behavior, and dialog model necessary to make them interesting members of the environment. Web-based educational content, exercises, and quizzes are imported into the virtual worlds, reducing the effort needed to create new NPCs with associated educational content. The NPC architecture supports multi-platform NPCs in two virtual environments, our own CVE (Collaborative Virtual Environment) and Second Life.

FP: Bin Chen, Fengru Huang, Hui Lin, Mingyuan Hu (China)

VCUHK: Integrating the Real into a 3D Campus in Networked Virtual Worlds

The rapid development of the World Wide Web and the dominance of networked computers for information transfer and communication have enabled the rise of virtual worlds (e.g. Second Life™). This paper introduces our virtual campus project, Virtual CUHK (VCUHK), which builds a 3D virtual campus of the Chinese University of Hong Kong (CUHK) in networked virtual worlds. VCUHK is a 3D shared networked virtual world of the real CUHK campus built with the OpenSimulator platform, an open source virtual world server project that adapts the basic server functionality of the Second Life™ servers. The real CUHK campus occupies an area of approximately 2 square km with hilly terrain and a diverse and unique landscape, and has a great number of buildings with very sophisticated architecture. By using the Second Life™ viewer (an open source 3D virtual world viewer) or other compatible viewers, users can access VCUHK from any Internet-connected personal computer running MS Windows or Linux. Users can immerse themselves in VCUHK, taking any form as a 3D avatar, and experience virtual education and other diverse campus activities.

SP: Steven J. Zuiker, J. Patrick Williams, David Kirschner, Katherine Greer Littlefield, Manikantan Krishnamurthy (Singapore)

Alone Together in Cyberworlds? Bridging Cyberworld Development and Design through Education MMOs

Cyberworlds fuel innovations in development and design, but whether and how to catalyze a slow-moving interplay among them remains an open question. This paper develops an argument for organizing interdisciplinary research through and around cyberworlds in order to bridge design and development by means of a collaboratory. To this end, it provides illustrative examples of plausible intersections between social sciences design agendas and broader cyberworld development agendas.

SP: Leon Ho Chiau Wai, Alexei Sourin (Singapore)

Setting Cyber-instructors in Cyberspace

Cyber-instructors, virtual humans in cyberspace capable of maintaining conversation with learners as well as offering different educational services, may become an excellent teaching tool implementing personal mentoring in Cyberworlds. In this paper, a framework for the cyber-instructor based on commonly available software tools is proposed. The components of the framework are replaceable and can be easily tuned to particular educational needs and technical implementations. Several implementations of the cyber-instructor with various looks and feels of the HCI have been studied and implemented, including a 3D talking avatar, a web chat with multimedia components, and chat engine communication.

Computer Vision, Augmented and Mixed Reality

FP: Xueqin Xiang, Guangxia Li, Jing Tong, Zhigeng Pan (China)

Fast and Simple Super Resolution for Range Data

Current active 3D range sensors, such as time-of-flight cameras, enable the acquisition of range maps at video frame rate. Unfortunately, the resolution of the range maps is quite limited and the captured data are typically contaminated by noise. We therefore present a simple pipeline to enhance the quality as well as improve the spatial and depth resolution of range data in real time by upsampling the depth information with the data from a high resolution video camera and utilizing a new strategy to increase the sub-pixel accuracy. Our algorithm can greatly improve the reconstruction quality and boost the resolution of the range data to that of the video sensor while achieving high computational efficiency for a real-time application.

FP: Hock Soon Tan, Jiazhi Xia, Ying He, YQ Guan (Singapore)

A System for Capturing, Rendering and Multiplexing Images on Multi-view Autostereoscopic Display

Current trends in digital display technology show a marked interest in 3D displays, which allow three dimensional images to be conveyed to viewers. Among various 3D display techniques, autostereoscopic display appears to be promising due to the use of optical trickery at the display, allowing glasses-free viewing. However, the cost of generating and transmitting the autostereoscopic images is usually quite high due to the huge amount of data. Hence, it is challenging to acquire artifact-free 3D images in real-time. This paper presents a system to generate and display high-resolution autostereoscopic images at full HD resolution, i.e., 1920 × 1080 × 24. We show that the video-plus-depth data representation enables a scalable system architecture and efficient data transmission. The proposed GPU accelerated depth image-based rendering (DIBR) algorithm and multiplexing algorithm are able to synthesize autostereoscopic images in real-time. The synthesized images are then displayed on the autostereoscopic screen that is mounted on a conventional LCD monitor. We demonstrate our system on both indoor activities and natural scenes.

FP: Kei Iwasaki, Yoshinori Dobashi, Tomoyuki Nishita (Japan)

Interactive Lighting and Material Design for Cyber Worlds

Interactive rendering under complex real world illumination is essential for many applications such as material design, lighting design, and virtual realities. For such applications, interactive manipulations of viewpoints, lighting, BRDFs, and positions of objects are beneficial to designers and users. This paper proposes a system that acquires complex, all-frequency lighting environments and renders dynamic scenes under captured illumination, for lighting and material design applications in cyber worlds. To capture real world lighting environments easily, our method uses the camera of a cellular phone. To handle dynamic scenes of rigid objects and dynamic BRDFs, our method decomposes the visibility function at each vertex of each object into the occlusion due to the object itself and occlusions due to other objects, which are represented by a nonlinear piecewise constant approximation, called cuts. We propose a compact cut representation and an efficient algorithm for cut operations. By using our system, interactive manipulation of positions of objects and real-time rendering with dynamic viewpoints, lighting, and BRDFs can be achieved.

FP: Ray Jarvis, Nghia Ho (Australia)

Robotic Cybernavigation in Natural Known Environments

This paper concerns the navigation of a physical robot in real natural environments which have been previously scanned in considerable (3D and colour image) detail so as to permit virtual exploration by cybernavigation prior to mission replication in the real world. An on-board high speed 3D laser scanner is used to localize the robot (determine its position and orientation) in its working environment by applying scan matching against the model data previously collected.

FP: D. Zhang, Y. Shen, S.K. Ong, A.Y.C. Nee (Singapore)

An Affordable Augmented Reality based Rehabilitation System for Hand Motions

Repetitive practice can facilitate the rehabilitation of the motor functions of the upper extremity for stroke survivors whose motor functions have been impaired. An affordable rehabilitation system for hand motion would be useful to provide intensive training to the patients. Augmented Reality (AR) can provide users with an intuitive interface, a programmable environment and a sense of realism. In this research, a low cost, viable rehabilitation system for hand motion is developed based on AR technology. In this application, seamless interaction between the virtual environment and the real hand is supported with markers and a self-designed data-glove. The data-glove is low cost (<200 Singapore dollars); it is used to detect the flexing of the fingers and control the movements of the virtual hands.

FP: Shah Atiqur Rahman, Liyuan Li, M.K.H. Leung (Singapore)

Human Action Recognition by Negative Space Analysis

We propose a novel region-based method to recognize human actions by analyzing regions surrounding the human body, termed as negative space according to art theory, whereas other region-based approaches work with silhouette of the human body. We find that negative space provides sufficient information to describe each pose. It can also overcome some limitations of silhouette based methods such as leaks or holes in the silhouette. Each negative space can be approximately represented by simple shapes, resulting in computationally inexpensive feature description that supports fast and accurate action recognition. The proposed system has obtained 100% accuracy on the Weizmann human action dataset and is found more robust with respect to partial occlusion, shadow, noisy segmentation and non-rigid deformation of actions than other methods.
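
The notion of negative space in the abstract above can be illustrated with a minimal sketch. It assumes a binary silhouette grid and takes the negative space to be the empty cells inside the silhouette's bounding box, grouped into connected regions. The function name `negative_space_regions`, the grid encoding and the 4-connectivity choice are illustrative assumptions, not the authors' implementation.

```python
from collections import deque


def negative_space_regions(silhouette):
    """Return the empty regions inside the silhouette's bounding box.

    `silhouette` is a list of equal-length rows of 0/1 values (1 = body).
    Each region is a sorted list of (row, col) cells.
    """
    body = [(r, c) for r, row in enumerate(silhouette)
            for c, v in enumerate(row) if v]
    if not body:
        return []
    top, bottom = min(r for r, _ in body), max(r for r, _ in body)
    left, right = min(c for _, c in body), max(c for _, c in body)

    seen, regions = set(), []
    for r in range(top, bottom + 1):
        for c in range(left, right + 1):
            if silhouette[r][c] or (r, c) in seen:
                continue
            # Flood fill (4-connectivity) to collect one empty region.
            region, queue = [], deque([(r, c)])
            seen.add((r, c))
            while queue:
                cr, cc = queue.popleft()
                region.append((cr, cc))
                for nr, nc in ((cr + 1, cc), (cr - 1, cc),
                               (cr, cc + 1), (cr, cc - 1)):
                    if (top <= nr <= bottom and left <= nc <= right
                            and not silhouette[nr][nc]
                            and (nr, nc) not in seen):
                        seen.add((nr, nc))
                        queue.append((nr, nc))
            regions.append(sorted(region))
    return regions
```

Each returned region could then be summarized by a simple shape descriptor (for example its area or bounding rectangle), in the spirit of the simple-shape approximation the abstract mentions.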

Virtual Humans and Avatars

FP: Linbo Luo, Suiping Zhou, Wentong Cai, Michael Lees, Malcolm Yoke Hean Low (Singapore)

Modeling Human-like Decision Making for Virtual Agents in Time-critical Situations

Generating human-like behaviors for virtual agents has become increasingly important in many applications, such as crowd simulation, virtual training, digital entertainment, and safety planning. One of the challenging issues in behavior modeling is how virtual agents make decisions in time-critical and uncertain situations. In this paper, we present HumDPM, a decision process model for virtual agents, which incorporates two important factors of human decision making in time-critical situations: experience and emotion. In HumDPM, rather than relying on deliberate rational analysis, an agent makes its decisions by matching past experience cases to the current situation. We propose a detailed representation of an experience case and investigate the mechanisms of situation assessment, experience matching and experience execution. To incorporate emotion into HumDPM, we introduce an emotion appraisal process in situation assessment for emotion elicitation. In HumDPM, the decision making process of an agent may be affected by its emotional states when: 1) deciding whether it is necessary to do a re-match of experience cases; 2) determining the situational context; and 3) selecting experience cases. We illustrate the effectiveness of HumDPM in crowd simulation. A case study of emergency evacuation in a subway station scenario is conducted, which shows how a varied crowd composition leads to different evacuation behaviors, due to the retrieval of different experiences and the variation of agents’ emotional states.

FP: Mustafa Kasap, Nadia Magnenat-Thalmann (Switzerland)

Customizing and Populating Animated Digital Mannequins for Real-time Application

Animated human body models are widely used in computer graphics applications. Creating such models requires extensive design efforts, specialized hardware such as body scanners, model databases and authoring tools. Customizing these characters or creating a virtual population with them requires multiple design efforts or powerful computers. In this paper we present a simple and robust method to create variously sized bodies based on a single template animated human body model. To achieve this, our method uses the skinning information attached to the model. By taking the positions of skeletal joints as a reference, our method automatically segments the body into its anatomical regions. Then, by using the anthropometric landmarks as parameters, the regions are deformed and the underlying skeletal structure is adapted to fit the new morphology. Our method preserves the skeleton-mesh integrity for variously sized bodies. We demonstrate our method by using a database of anthropometry measurements to generate a population of virtual humans from our single template model.

FP: Qi Xing, Wenzhen Yang, Jihui Li, Mark M. Theiss, Jim X. Chen (United States)

3D Automatic Feature Construction System for Lower Limb Alignment

Accurate measurement of lower limb alignment is vital for orthopedic surgery planning. Based on 3D lower limb bone models reconstructed from CT images, a 3D feature construction system is developed to find the lower limb alignment. This paper presents new methods to automatically identify the anatomic axes, mechanical axes, joint center points, and reference planes of the lower extremities. As far as we know, there is no other system that can construct all these features automatically. With the identified features, our system can accurately calculate the related angles of the lower limb alignment for orthopedic knee surgery planning. We applied our system to two groups of specimens (with 6 pairs of lower limbs), and the resulting related angles are identical to the actual angles of the lower limb alignment.

FP: Suriati bte Sadimon, Mohd Shahrizal Sunar, Dzulkefli Mohamad, Habibollah Haron (Malaysia)

Computer Generated Caricature: A Survey

Caricature is a pictorial representation of a person or subject that summarizes it by exaggerating the most distinctive features and simplifying the common ones, so that the subject stands out from others while its likeness is preserved. Computer Generated Caricature was developed to assist the user in producing caricatures automatically or semi-automatically. It derives from rapid advances in computer graphics and computer vision and was introduced as part of computer graphics’ non-photorealistic rendering technologies. Recently, Computer Generated Caricature has become a particularly interesting research topic because of its advantages in privacy, security, simplification and amusement, and its rapidly emerging real-world applications in magazines, digital entertainment, the Internet and mobile applications. This paper surveys the uses of caricature in a variety of applications, the theories and rules of the art of drawing caricature, how these theories are simulated in caricature generation systems, and the current research trends in this field. Computer generated caricature can be divided into two main categories based on the input data type: the human centered approach and the image processing approach. The process of generating a caricature from an input photo is then explained briefly. The paper also reports the state of the art techniques for generating caricature, classifying them into four approaches: interactive, regularity-based, learning-based and predefined database of caricature illustration. Lastly, the paper discusses relevant issues, problems and several promising directions for future research.

FP: Weihe Wu, Aimin Hao, Yongtao Zhao (China)

Geodesic Model of Human Body

Anthropometry is widely applied in research on skeleton extraction from surface meshes of the human body. In particular, anatomical proportions can be employed as a benchmark in model segmentation and joint extraction. Unfortunately, anatomical proportions are usually measured with the Euclidean distance, which makes them difficult to correlate with the surface mesh. To bridge this gap, we take advantage of the invariance of geodesic metrics to rotation, translation, scaling and model pose, and propose an original geodesic model in which the length of each part of the human body is measured by geodesic metrics, so that the anatomical proportions can be directly mapped to the contours of the mesh surface of a human body in an arbitrary pose. Combining the geodesic model with automatic extraction of feature points, we can determine the candidate scopes of joint positions and boundaries between the parts on meshes, and then refine the joint positions in the scopes using existing methods. Finally, we illustrate the utility of the geodesic model with an application to joint extraction.
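
To make the pose-invariance argument concrete, here is a minimal sketch that approximates geodesic distance by the shortest path along mesh edges (Dijkstra's algorithm). This edge-path approximation, the function name `geodesic_distances` and the toy three-vertex "limb" are assumptions for illustration; the abstract does not state how the authors compute geodesic metrics.

```python
import heapq
import math


def geodesic_distances(vertices, edges, source):
    """Shortest along-edge distance from `source` to every vertex.

    vertices: list of (x, y, z) points; edges: list of (i, j) index pairs.
    This approximates the surface geodesic by paths restricted to edges.
    """
    adjacency = {i: [] for i in range(len(vertices))}
    for a, b in edges:
        w = math.dist(vertices[a], vertices[b])
        adjacency[a].append((b, w))
        adjacency[b].append((a, w))

    dist = [math.inf] * len(vertices)
    dist[source] = 0.0
    heap = [(0.0, source)]
    while heap:
        d, u = heapq.heappop(heap)
        if d > dist[u]:
            continue  # stale queue entry
        for v, w in adjacency[u]:
            nd = d + w
            if nd < dist[v]:
                dist[v] = nd
                heapq.heappush(heap, (nd, v))
    return dist


# A toy "limb" of two unit segments, once straight and once bent at the joint.
edges = [(0, 1), (1, 2)]
straight = [(0, 0, 0), (1, 0, 0), (2, 0, 0)]
bent = [(0, 0, 0), (1, 0, 0), (1, 1, 0)]
print(geodesic_distances(straight, edges, 0)[2])  # 2.0
print(geodesic_distances(bent, edges, 0)[2])      # 2.0
print(math.dist(bent[0], bent[2]))                # Euclidean distance shrinks when bent
```

The along-surface length stays the same when the limb bends while the Euclidean end-to-end distance changes, which is the property the abstract relies on.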

FP: Siu Man Lui, Wendy Hui (Australia)

Effects of Smiling and Gender on Trust Toward a Recommendation Agent

This study seeks to determine whether a recommendation agent’s smile and gender affect users’ trust toward the agent. We also investigate gender differences in trust toward recommendation agents of different genders and facial expressions. We present findings from a 2x2 experiment (male vs. female and smiling vs. non-smiling agents). In the context of laptop shopping, we found that on average smiling agents are perceived to be more competent than non-smiling agents and male agents are perceived to be more competent than female agents. There is a significant interaction effect between an agent’s smile and gender on trust in benevolence. Specifically, male subjects tend to believe that smiling agents are more competent than non-smiling agents, while female subjects are less sensitive to the agent’s smile. Interestingly, female subjects perceive male agents to be more competent than female agents, while male subjects are almost indifferent toward the agent’s gender. Among the female subjects, trust in benevolence is highest toward a smiling male agent. Our results suggest that the gender and facial expressions of a recommendation agent should be considered when designing a virtual salesperson for online stores.

FP: Héctor Rafael Orozco Aguirre, Félix Francisco Ramos Corchado, Marco Antonio Ramos Corchado, Daniel Thalmann (Mexico)

A Fuzzy Model to Update the Affective State of Virtual Humans: An Approach Based on Personality

In this paper, we present a fuzzy mechanism to update the emotional and mood states of virtual humans in a more natural way. To implement this mechanism, we take into account the ten personality scales defined by the Minnesota Multiphasic Personality Inventory to endow virtual humans with a real personality. In this manner, we apply different sets of fuzzy rules to change and regulate the affective state of virtual humans according to their personality, emotional and mood history, and the level of intensity of events they perceive from their environment.

FP: Michael Athanasopoulos, Hassan Ugail, Gabriela González Castro (United Kingdom)

On the Development of an Interactive Talking Head System

In this work we propose a talking head system for animating facial expressions using a template face generated from partial differential equations (PDE). It uses a set of preconfigured curves to calculate an internal template surface face. This surface is then used to associate various facial features with a given 3D face object. Motion retargeting is then used to transfer the deformations in these areas from the template to the target object. The procedure is continued until all the expressions in the database are calculated and transferred to the target 3D human face object. Additionally, the system interacts with the user using an artificial intelligence (AI) chatterbot to generate a response from a given text. Speech and facial animation are synchronized using the Microsoft Speech API, where the response from the AI bot is converted to speech.

SP: Asako Soga, Ronan Boulic, Daniel Thalmann (Japan)

Motion Planning and Animation Variety using Dance Motion Clips

Our goal is to create dancing crowds in cyberworlds and to use this feature to support creative endeavors such as pre-visualization of choreography and actual stage performances. In this paper we present a method of motion planning using dance motion clips. We describe a trial algorithm of collision avoidance using a grid map. We also present methods to create variety in animation for dance choreographies. As a result, we confirmed that most collisions could be avoided by the motion planning method. However, the need for some improvements in creating a conceptual dancing crowd was found.
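The idea of avoiding collisions on a grid map can be sketched as a reservation scheme: each dancer tries motion clips in order of preference, and a clip is accepted only if none of the grid cells it passes through is already reserved at the same time step. This is a minimal sketch under stated assumptions, not the trial algorithm from the paper: the clip format (a list of cell offsets per time step), the names `plan`, `slide_right`, `slide_left` and `slide_up`, and the first-come priority order are all hypothetical.

```python
def plan(dancers, clips):
    """Assign one clip per dancer so that no two dancers share a cell
    at the same time step.

    dancers -- dict: name -> (start_cell, list of preferred clip names)
    clips   -- dict: clip name -> list of (dx, dy) offsets, one per time step
    Returns a dict: name -> chosen clip name, or None if every clip collides.
    """
    reserved = set()  # (time_step, (x, y)) pairs already taken
    assignment = {}
    for name, (start, preferences) in dancers.items():
        assignment[name] = None
        for clip_name in preferences:
            # Cells this dancer would occupy at each time step of the clip.
            path = [
                (t, (start[0] + dx, start[1] + dy))
                for t, (dx, dy) in enumerate(clips[clip_name])
            ]
            if not any(step in reserved for step in path):
                reserved.update(path)
                assignment[name] = clip_name
                break
    return assignment


if __name__ == "__main__":
    clips = {
        "slide_right": [(0, 0), (1, 0), (2, 0)],
        "slide_left": [(0, 0), (-1, 0), (-2, 0)],
        "slide_up": [(0, 0), (0, 1), (0, 2)],
    }
    dancers = {
        "a": ((0, 0), ["slide_right"]),
        "b": ((2, 0), ["slide_left", "slide_up"]),
    }
    print(plan(dancers, clips))
```

In the usage example, dancer `b` would meet dancer `a` in cell (1, 0) at the second time step, so `b` falls back to its second preference. The check only covers two dancers occupying the same cell at the same time; dancers swapping adjacent cells are not detected, and a dancer whose clips all collide is left unassigned.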

SP: Choong Seng Chan, Flora S. Tsai (Singapore)

Computer Animation of Facial Emotions

Computer facial animation remains a very challenging topic within the computer graphics community. In this paper, a realistic and expressive computer facial animation system is developed by automated learning from Vicon Nexus facial motion capture data. Facial motion data of different emotions collected using Vicon Nexus are processed using dimensionality reduction techniques such as PCA and EMPCA. Of the techniques compared, EMPCA was found to best preserve the original data. Finally, the emotion data are mapped to a 3D animated face, producing results that clearly show the motion of the eyes, eyebrows, and lips. Our approach uses data captured from a real speaker, resulting in more natural and lifelike facial animations. This approach can be used for various applications and can serve as a prototyping tool to automatically generate realistic and expressive facial animation.

Brain-computer Interfaces and Cognitive Informatics

FP: Yisi Liu, Olga Sourina, Minh Khoa Nguyen (Singapore)

Real-Time EEG-based Emotion Recognition and Visualization

Emotions accompany everyone in daily life, play a key role in non-verbal communication, and are essential to the understanding of human behavior. Emotions can be recognized from text, speech, facial expression or gesture. In this paper, we concentrate on recognition of “inner” emotions from electroencephalogram (EEG) signals, because humans can control their facial expressions or vocal intonation. The need for and importance of automatic emotion recognition from EEG signals has grown with the increasing role of brain-computer interface applications and the development of new forms of human-centric and human-driven interaction with digital media. We propose a fractal dimension based algorithm for quantifying basic emotions and describe its implementation as feedback in 3D virtual environments. The user’s emotions are recognized and visualized in real time on his/her avatar, adding one more so-called “emotion dimension” to human-computer interfaces.
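The abstract does not say which fractal dimension estimator is used. As one possible illustration, the sketch below computes Higuchi's fractal dimension, an estimator often applied to EEG time series; choosing it here is an assumption, as are the function name `higuchi_fd` and the default `kmax=8`. The mapping from the resulting value to an emotion is not covered.

```python
import math


def higuchi_fd(signal, kmax=8):
    """Estimate the fractal dimension of a 1-D signal with Higuchi's method.

    Assumes a non-constant signal with more than kmax + 1 samples.
    """
    n = len(signal)
    if n <= kmax + 1:
        raise ValueError("signal is too short for the chosen kmax")

    log_inv_k, log_length = [], []
    for k in range(1, kmax + 1):
        lengths = []
        for m in range(k):  # starting offset of the subsampled curve
            n_int = (n - 1 - m) // k  # number of steps of size k from m
            dist = sum(
                abs(signal[m + i * k] - signal[m + (i - 1) * k])
                for i in range(1, n_int + 1)
            )
            # Normalised curve length for this offset and step size.
            lengths.append(dist * (n - 1) / (n_int * k) / k)
        log_inv_k.append(math.log(1.0 / k))
        log_length.append(math.log(sum(lengths) / len(lengths)))

    # Least-squares slope of log(length) against log(1/k).
    mean_x = sum(log_inv_k) / len(log_inv_k)
    mean_y = sum(log_length) / len(log_length)
    num = sum((x - mean_x) * (y - mean_y) for x, y in zip(log_inv_k, log_length))
    den = sum((x - mean_x) ** 2 for x in log_inv_k)
    return num / den


if __name__ == "__main__":
    ramp = [float(i) for i in range(200)]
    print(higuchi_fd(ramp))  # a straight line has dimension close to 1
```

A smooth signal such as the ramp gives a value near 1, while irregular, noise-like signals give values approaching 2, which is what makes the measure usable as a feature computed over a sliding window of EEG samples.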

FP: Qiang Wang, Olga Sourina, Minh Khoa Nguyen (Singapore)

EEG-based “Serious” Games Design for Medical Applications

Recently, EEG-based technology has become more popular in the design and development of “serious” games, since new wireless headsets that meet consumer demand for wearability, price, portability and ease of use are coming to the market. Originally, EEG-based technologies were used in neurofeedback games and brain-computer interfaces. Now, such technologies can be used in entertainment, e-learning and new medical applications. In this paper, we review neurofeedback game designs and algorithms, and propose the design, algorithm, and implementation of new EEG-based 2D and 3D concentration games. Possible future medical applications of the games are discussed.

SP: Danny Plass-Oude Bos, Boris Reuderink, Bram van de Laar, Hayrettin Gürkök, Christian Mühl, Mannes Poel, Dirk Heylen, Anton Nijholt (Netherlands)

Human-Computer Interaction for BCI Games: Usability and User Experience

Brain-computer interfaces (BCI) come with a lot of issues, such as delays, bad recognition, long training times, and cumbersome hardware. Gamers are a large potential target group for this new interaction modality, but why would healthy subjects want to use it? BCI provides a combination of information and features that no other input modality can offer. But for general acceptance of this technology, usability and user experience will need to be taken into account when designing such systems. This paper discusses the consequences of applying knowledge from Human-Computer Interaction (HCI) to the design of BCI for games. The integration of HCI with BCI is illustrated by research examples and showcases, intended to take this promising technology out of the lab. Future research needs to move beyond feasibility tests, to prove that BCI is also applicable in realistic, real-world settings.

SP: Brahim Hamadicharef, Mufeng Xu, Sheel Aditya (Singapore)

Brain-Computer Interface (BCI) based Musical Composition

In this paper we present, for the first time in Brain-Computer Interface (BCI) research, a novel application for music composition. Based on the I2R NeuroComm BCI platform, we developed a novel Graphical User Interface (GUI) that allows short melodies to be composed by selecting individual musical notes. The user can select any individual musical key (quarter note with Do, Ré, Mi, Fa, Sol, La, Si, Do, etc.), insert a silence (quarter rest), delete (Del) the last note to correct the composition, or listen (Play) to the final melody, which is shown in text form and as a musical score. Our BCI system for music composition has been successfully demonstrated and shown to be effective and easy to use with very short training time. This work is the first step towards a more intelligent BCI system that will extend current BCI-based Virtual Reality (VR) navigation, e.g. for exploring a Virtual Synthesizer Museum/shop, in which the user would first move around within the virtual museum/shop and, at a point of interest, try the synthesizer by composing music. Future developments will add more advanced modes (e.g. a musical puzzle) with various levels of difficulty, as well as support for the OpenViBE environment and mobile platforms such as Apple’s iPad.

SP: Liu Lin Yi, Mark Chavez (Singapore)

Cinematics and Narratives: An Exploitation of Real-Time Animation

The research goal of the project Cinematics and Narratives (CaN) is to explore approaches to the development of contemporary animation. CaN comprises three integrated objectives: the first focuses on developing and exploiting real-time animation and content within the context of a visual and narrative design based repository of primitives; the second explores the dynamic of context, exposition and expression, mixing our design primitives into a new dynamic form; and the third is to interface this system with an audience in such a way that the system learns from viewer reaction, automatically refining the design based on the emotive input of the viewer.

Networked Collaboration

FP: Andreas Kunz, Thomas Nescher, Martin Küchler (Switzerland)

CollaBoard: A Novel Interactive Electronic Whiteboard for Remote Collaboration with People on Content

In this paper, we present a device called ‘CollaBoard’. It was developed in the context of ongoing CSCW research efforts in developing groupware that mediates remote collaboration processes. CollaBoard combines video- and data-conferencing by overlaying a life-sized video showing the entire upper body of remote people in front of the displayed shared content. By doing so, CollaBoard shows pose, gaze, and gestures of remote partners, preserves the meaning of users’ deictic gestures when pointing at displayed shared artifacts, and keeps shared artifacts editable at both conference sites. For this, a new whiteboard software is also introduced, which allows a real-time synchronization of the generated artifacts. Finally, the functionality of two interconnected CollaBoard prototypes was verified in a usability assessment.

FP: Anderson Carlos Moreira Tavares, Sérgio Murilo Maciel Fernandes, Maria Lencastre Pinheiro de Menezes Cruz (Brazil)

NHE: Collaborative Virtual Environment with Augmented Reality on Web

Two-dimensional interface elements such as buttons and menus have been in use for 35 years. Technologies have since been developed to extend interfaces into three-dimensional environments. One of them, Augmented Reality, is attracting attention because it makes interaction with the virtual environment easy. At the same time, because human tasks are complex, people increasingly work together in collaborative groups supported by groupware. This article proposes a system that supports collaboration and offers easy interactivity and immersion by using augmented reality resources. This integration is not easy to find on the current market, which is a strong motivation for this work. The project can help several areas, for example distance education, engineering, architecture and marketing. Results show the viability of the system and its efficiency in applications that need easy manipulation of projects and a high degree of user immersion, supporting real-time, collaborative activities without network congestion.

FP: Igor Kirillov, Stanislav Klimenko (Russian Federation)

Plato’s Atlantis Revisited: Risk-informed, Multi-Hazard Resilience of Built Environment via Cyber Worlds Sharing

Resilience of the civil built environment is an ultimate means of protecting human lives, private and public assets, and the biogeocenosis environment during a natural catastrophe, major industrial accident or terrorist calamity. This paper outlines a theoretical framework for a new paradigm - "risk-informed, multi-hazard resilience of built environment" - and sketches a concept map for a minimal set of computational and analytic resources shared between different scientific and engineering cyber worlds, which can facilitate designing and maintaining a higher level of resilience of the real built environment.

Research Paper Presentation Details

Full Paper (FP) speakers will have 25 min for presentation and 5 min for Q&A. Short Paper (SP) speakers will have 15 min for presentation and 3 min for Q&A.

Before the conference, the speakers will be asked to email their brief bios and photos to the Program Chair to publish them on the conference web page. Before the session, the speakers have to identify themselves to the session chair.

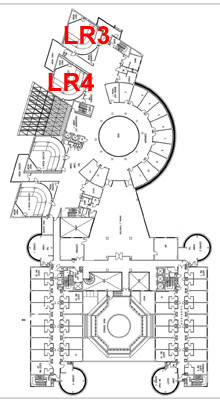

Each conference room is equipped with a personal computer running MS Windows XP and MS Office, a large screen projector, a microphone and Internet access (wired and Wi-Fi). Each seat in LR rooms has personal power and Internet sockets. Wi-Fi Internet will be available anywhere at NEC for delegates who stay at NEC. For those who stay downtown, we will make available a few PCs connected to the Internet for checking email. We will also be able to lend you a limited number of complimentary Wi-Fi accounts. Presenters may use their own notebooks if they wish. Alternatively, please bring your files in MS PowerPoint format on CD-ROM or USB drive. The files have to be copied to the computer before the respective session. Each conference room will be served by a student volunteer who will assist you with this.

2nd floor

3rd floor